Image Credit: SimpleImages / Getty

A new staff report from the House Judiciary Committee warns that the European Union is using its Digital Services Act (DSA) to pressure online platforms into censoring political speech, satire, and commentary, regardless of legality or origin.

While publicly promoted as a framework for digital safety, the DSA enables EU regulators to threaten platforms with heavy penalties if they fail to align their moderation policies with Brussels’ ideological standards.

The investigation, led by Committee Chairman Jim Jordan, R-Ohio, stems from a 2024 incident in which Thierry Breton, then EU Commissioner for the Internal Market, implied that a livestream between Elon Musk and Donald Trump could qualify as “illegal content” under the DSA.

We obtained a copy of the report for you here.

The European Commission later disavowed that warning, and Breton stepped down shortly afterward following pressure from Commission President Ursula von der Leyen. His replacement, Henna Virkkunen, has since continued to support and enforce the law’s most aggressive content controls.

According to the report, the DSA provides regulators with wide authority to demand the removal of speech that challenges their preferred narratives.

“The DSA is forcing companies to change their global content moderation policies,” the report states, adding that “European regulators expect platforms to deliver on DSA censorship demands by changing their global content moderation policies.” These demands have gone beyond Europe’s borders, compelling American tech giants to conform to European speech norms that may directly conflict with the First Amendment.

Platforms that fail to comply risk fines of up to 6 percent of their global revenue and can be temporarily shut down within the EU during vaguely defined “extraordinary circumstances” involving public health or security.

Under the law, platforms are also required to resolve disputes through “certified third-party arbitrators” who do not need to be independent from the EU bodies that approve them. This structure encourages arbitrators to side with regulators and pushes companies to self-censor before disputes even reach arbitration, especially since they must bear the cost if they lose.

Lawmakers say the legislation is being used to silence lawful political debate, particularly conservative views, as well as satire and memes. “European censors at the Commission and member state levels target core political speech that is neither harmful nor illegal,” the committee wrote. One internal EU workshop example labeled the phrase “we need to take back our country,” a routine political slogan, as “illegal hate speech.”

But it isn’t just political speech that’s being policed. “The documents also reveal that humor and satire are top censorship targets under the DSA,” with EU moderators encouraging platforms to use moderation processes to “address memes that may be used to spread hate speech or discriminatory ideologies.” The result, according to the committee, is that “platforms must censor political opinions, humor, and satire that runs afoul of the EU’s censorship regime.”

The report criticizes the DSA’s user threshold for defining “very large online platforms.” The 45 million monthly user cutoff applies strict oversight to American companies like X and Meta while allowing most European firms to sidestep similar scrutiny.

Documents obtained by the committee show that EU officials routinely pressured tech companies into adopting so-called “voluntary” content codes.

When companies declined to participate, they were often punished with official inquiries. The report highlights how the Commission opened a probe into X after it refused to use third-party fact-checkers endorsed by EU officials.

The enforcement apparatus behind the DSA includes financial threats that incentivize compliance. “The DSA incentivizes social media companies to comply with the EU’s censorship demands because the penalties for failing to do so are massive,” the report explains. “Platforms deemed noncompliant with the DSA can be fined up to six percent of their global revenue.”

The committee also reviewed internal materials from a closed-door workshop on May 7 that focused on DSA implementation.

The event, held under the Chatham House Rule, banned participants from revealing details about the scenarios discussed or identifying individuals involved.

Participants were asked how a fictional platform called “Delta” should respond to the content in those scenarios under DSA rules. They were instructed to consider updates to the platform’s global terms of service and to explore cooperation with “trusted flaggers” and civil society groups to identify content deemed harmful.

Company notes from the workshop described pressure to remove even lawful speech that might be viewed as offensive or harmful.

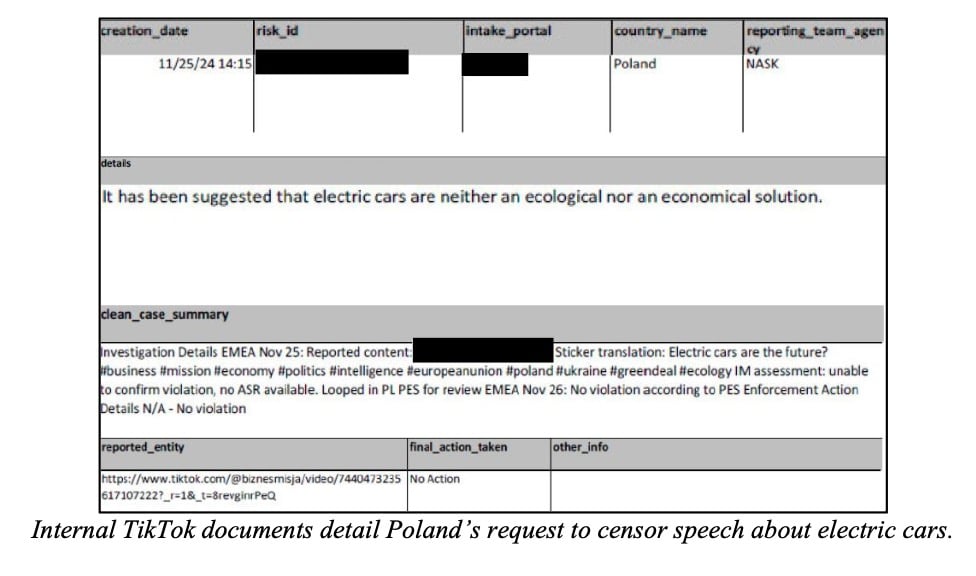

The committee cited multiple real-world examples of the DSA in action. In Poland, a state agency flagged a TikTok post that argued “electric cars are neither ecological nor an economical solution.”

French police attempted to remove a US-based post on X that “satirically noted that a terrorist attack perpetrated by a Syrian refugee may have been caused by permissive French immigration and citizenship policies.” German authorities classified a tweet calling for the deportation of criminal aliens as “incitement to hatred,” “incitement to violence,” and an “attack on human dignity.”

EU-funded organizations such as the Institute for Strategic Dialogue and Access Now were noted for pushing platforms to go beyond legal requirements and remove content they judged as contributing to hate or misinformation.

The European Digital Media Observatory reportedly criticized user-driven fact-checking systems like Community Notes, insisting that crowdsourced solutions are ineffective and inadequate for combating disinformation.

The report also raises alarms about how EU member states are using court rulings to issue global takedown orders.